I’ve been using dokku to host the majority of my personal projects for the last few years. It’s a delightful little self-hosted Platform as a Service, offering a Heroku-like workflow with all the flexibility of hosting on your own hardware. I’ve been running many dokku apps on a single Linode instance. The workflow of simply typing git push dokku master to deploy to production is wonderful.

why change?

Over time, however, the need to tend to that Linux box like a pet (keeping security patches up to date and responding to periodic scheduled maintenance) has become a bit of a pain. When I saw the announcement of Google Cloud Run this week, along with what looks like a generous free pricing tier, I decided to see whether it might be a nice replacement. Here’s a quick HOWTO if you’d like to try it yourself.

dokku workflow

dokku presents an interface that is more or less a clone of the Heroku CLI. Behind the scenes it runs Heroku’s various language-specific buildpacks inside Docker: it auto-detects the language of each project, installs dependencies, reads config from a Procfile, and injects secrets and per-instance configuration via environment variables. I wanted as close a mirror as possible of this developer experience on Cloud Run.

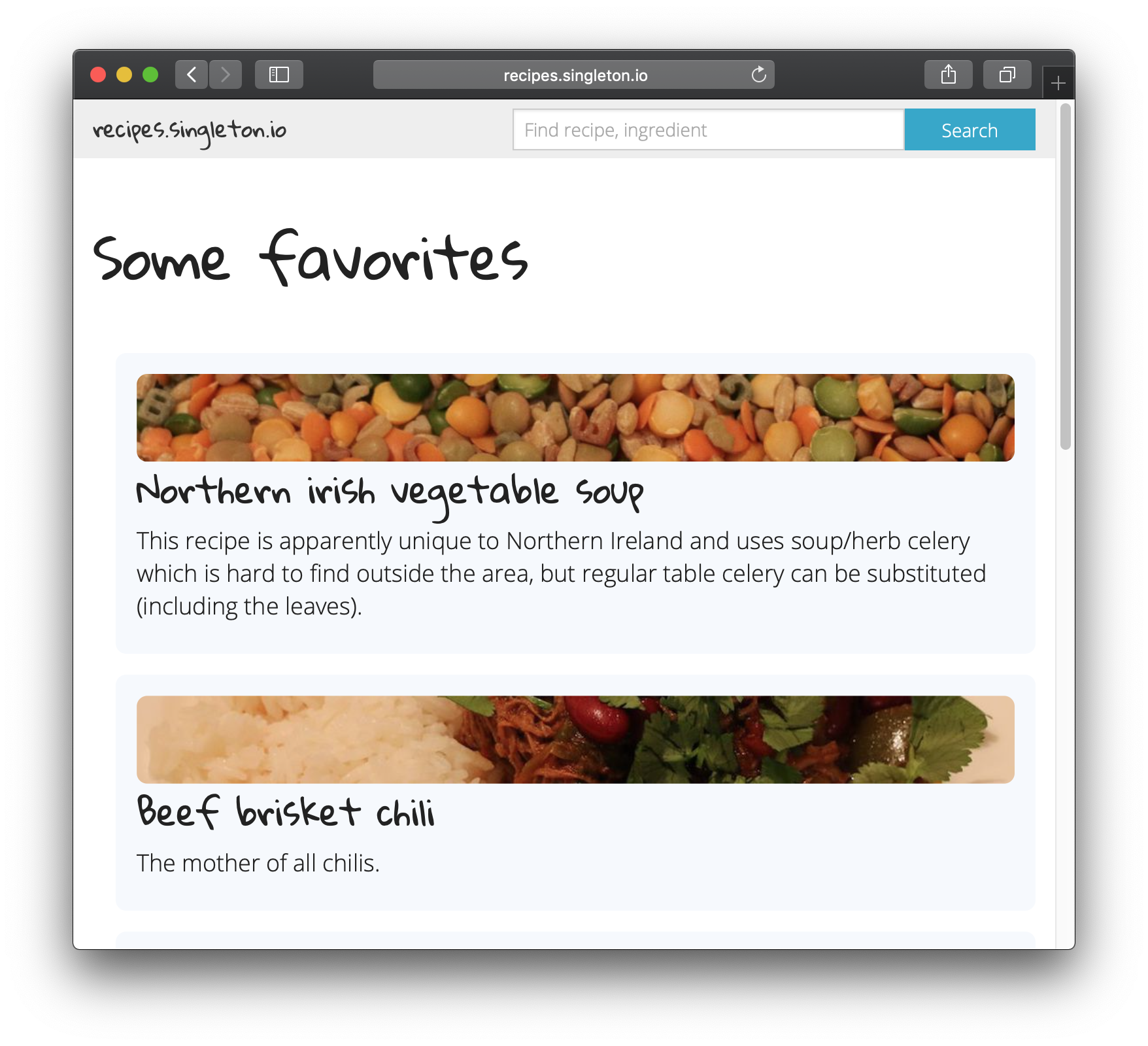

I have mostly been running Python apps with dokku, so I decided to migrate a really simple one: a little recipe site I often use for proofs of concept in new languages or tools. You can find the source code on GitHub.

For Python projects, dokku mostly cares about two config files:

requirements.txt, which defines the dependencies of the app. These get installed automatically by pip when you push an update.

== requirements.txt ==

```
...
Flask==1.0.2
futures==3.2.0
gunicorn==19.9.0
...
```

and Procfile, which defines how each process in your app gets run. recipes has just one process, but some of the apps I run define several services. Google Cloud Run is only viable for services that are fully stateless (recipes is: it reads all its state from a GitHub repo and caches it in a Redis instance). You’ll probably only find Cloud Run useful if you have a pretty simple Procfile like the one below.

== Procfile ==

```
web: gunicorn --bind :$PORT --workers 1 --threads 8 app:app
```

One critical thing to note here is that both dokku and Google Cloud Run dynamically assign the port your app must listen on for user traffic, and pass it in the PORT environment variable. That is why the Procfile binds gunicorn to :$PORT rather than to a fixed port.
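To make the port requirement concrete, here is a minimal sketch of an app that honors $PORT using only the Python standard library (http.server) rather than Flask and gunicorn. The names resolve_port, HelloHandler and build_server, and the 8080 fallback for local runs, are assumptions for illustration and not part of the recipes code.

```python
import os
from http.server import BaseHTTPRequestHandler, HTTPServer


def resolve_port(env=os.environ, default=8080):
    """Return the port the platform asked us to listen on ($PORT)."""
    value = env.get("PORT")
    # The default is only a fallback for local runs (an assumption).
    return int(value) if value else default


class HelloHandler(BaseHTTPRequestHandler):
    def do_GET(self):
        body = b"hello\n"
        self.send_response(200)
        self.send_header("Content-Type", "text/plain")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)


def build_server(env=os.environ):
    # Bind to all interfaces (""), not 127.0.0.1: inside a container,
    # traffic arrives from outside the loopback interface.
    return HTTPServer(("", resolve_port(env)), HelloHandler)
```

Calling build_server().serve_forever() starts it; with PORT=5000 in the environment it listens on port 5000, which is the same contract gunicorn --bind :$PORT satisfies in the Procfile.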

Google Cloud Run

Google Cloud Run allows you to run stateless containers that are invocable via HTTP requests. Your app defines how to build its container image with a Dockerfile. Here’s a Dockerfile that uses a Python base image and installs dependencies with pip.

== Dockerfile ==

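```dockerfile
FROM python:2.7

# Copy local code to the container image.
ENV APP_HOME /app
WORKDIR $APP_HOME
COPY . .

# Install dependencies (including honcho) from requirements.txt.
RUN pip install -r requirements.txt

# Start the processes defined in the Procfile.
CMD exec honcho start
```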

The final line runs honcho each time the container is started. Honcho is a Python port of David Dollar’s Foreman, a command-line application that helps you manage and run Procfile-based applications. Honcho must be added to requirements.txt (pip install honcho; pip freeze > requirements.txt), but it then enables the same Procfile-based configuration I like.
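For intuition, here is a toy sketch of the two things a Procfile runner has to do before launching the web process: split each "name: command" line, and fill in $PORT. It is not honcho’s implementation. Honcho hands the command to a shell, which performs the expansion, whereas this sketch uses string.Template purely to make the substitution visible; parse_procfile and expand are hypothetical names.

```python
import shlex
from string import Template


def parse_procfile(text):
    """Parse 'name: command' lines into a {name: command} dict."""
    processes = {}
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith("#"):
            continue  # skip blank lines and comments
        # Split on the first colon only, so "app:app" in the command survives.
        name, sep, command = line.partition(":")
        if not sep:
            raise ValueError(f"not a Procfile line: {line!r}")
        processes[name.strip()] = command.strip()
    return processes


def expand(command, env):
    """Substitute $PORT-style variables; unknown ones are left untouched."""
    return Template(command).safe_substitute(env)


procfile = "web: gunicorn --bind :$PORT --workers 1 --threads 8 app:app\n"
procs = parse_procfile(procfile)
argv = shlex.split(expand(procs["web"], {"PORT": "8080"}))
```

With PORT=8080, argv comes out as the gunicorn command bound to :8080, which is what ends up listening for Cloud Run’s traffic.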

Cloud Run’s quickstart provides fairly easy-to-follow instructions for the next steps.

First, we build a Docker image based on the Dockerfile.

```
$ gcloud builds submit --tag gcr.io/$PROJECT/recipefe
Creating temporary tarball archive of 46 file(s) totalling 582.1 KiB before compression.
```

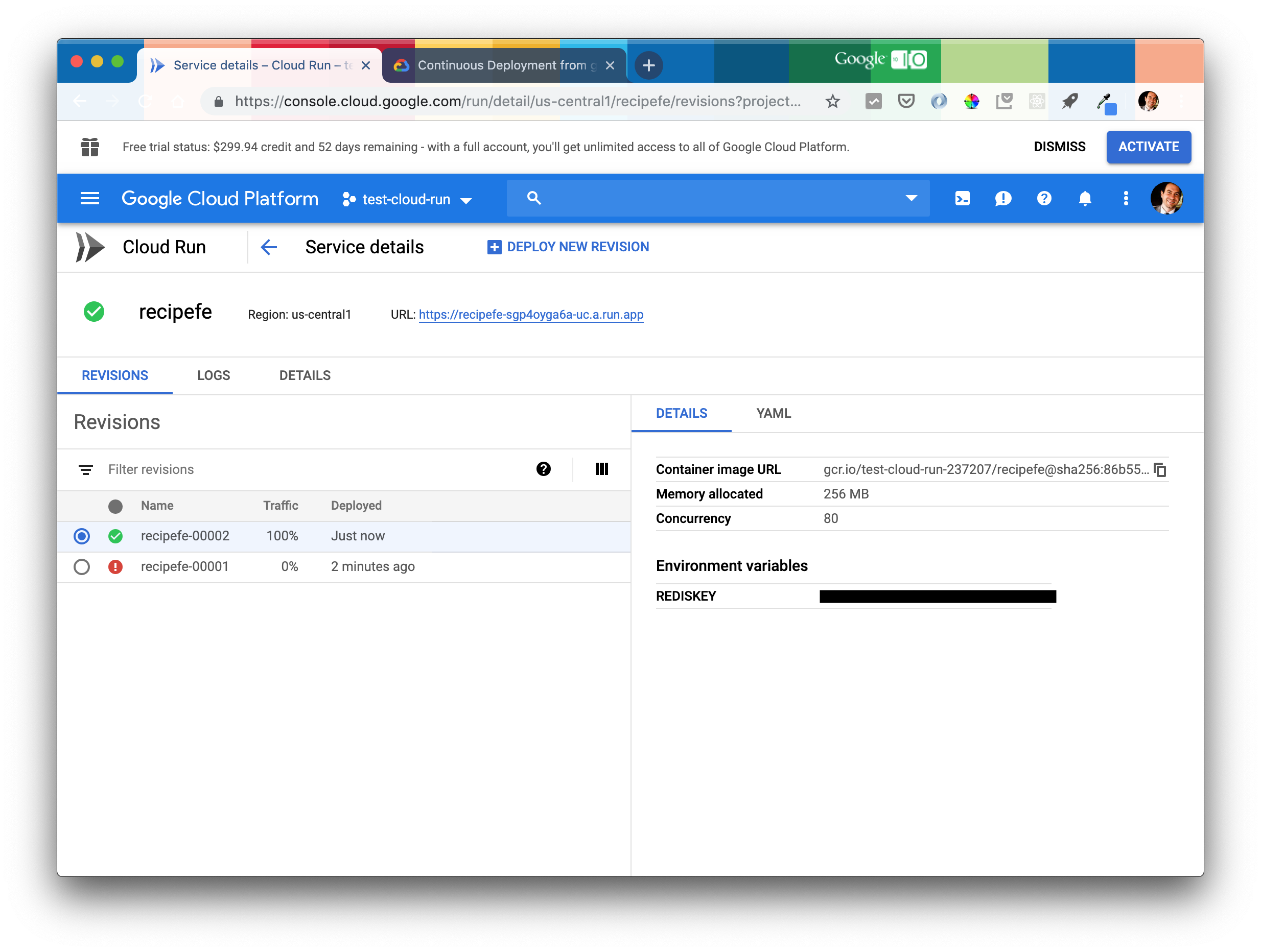

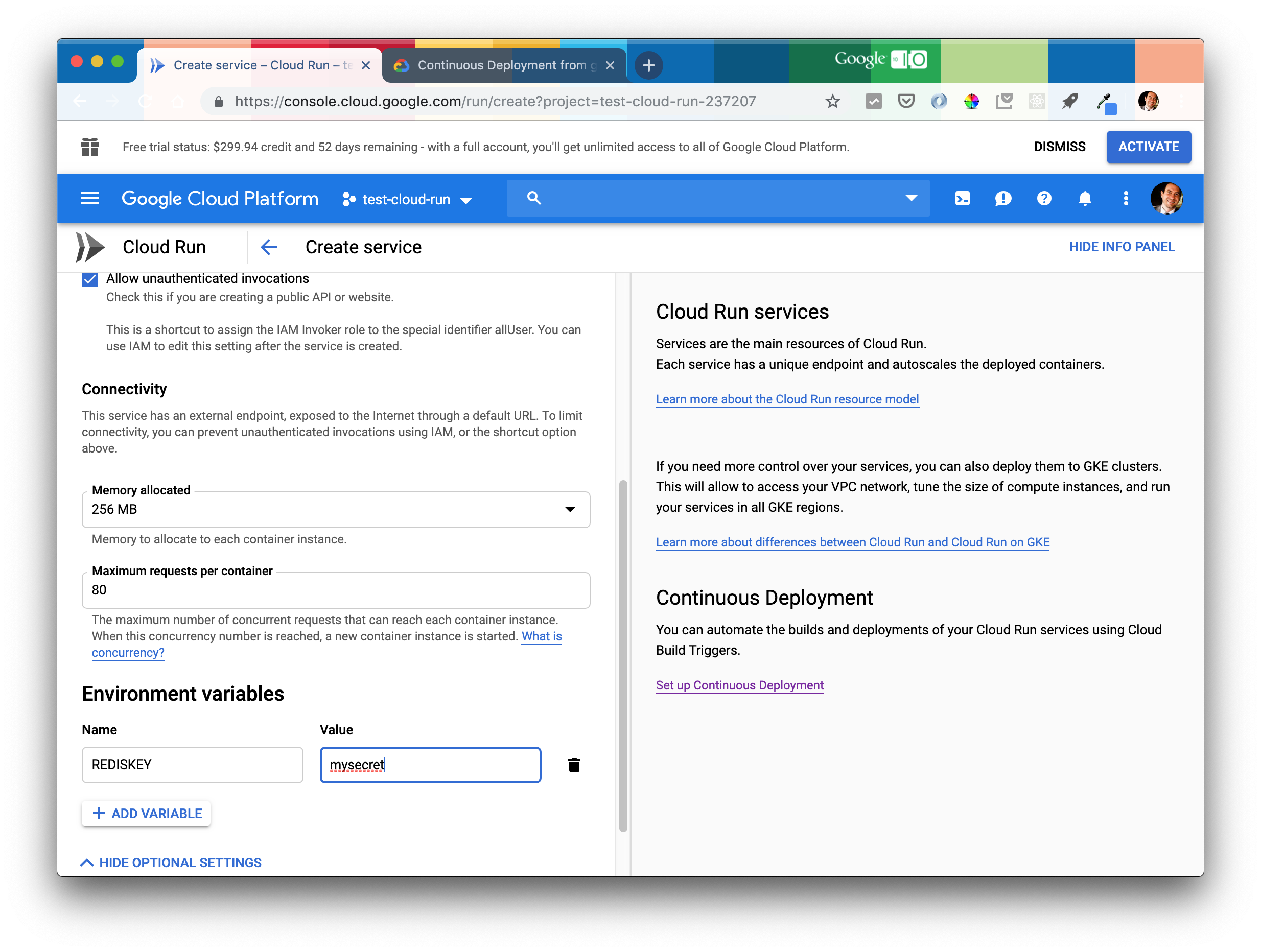

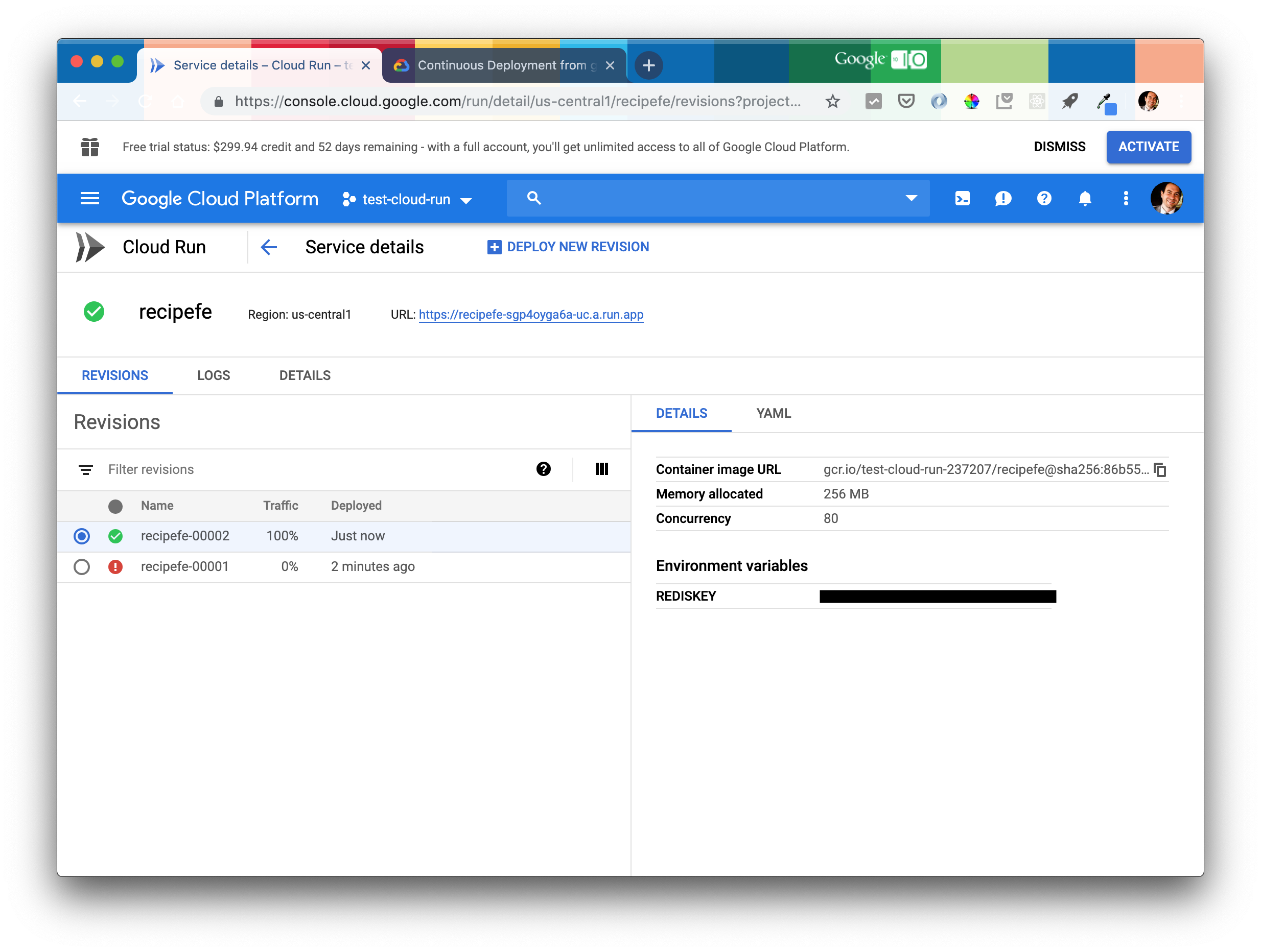

Next, create a new service with the Cloud Run console. Note that “allow unauthenticated invocations” must be selected for the site to be publicly reachable.

Secrets (in the case of recipes, the key for Redis) are configured in the console and get passed in as environment variables.
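Because secrets arrive as plain environment variables, the app can read them at startup and refuse to boot if one is missing. A minimal sketch, assuming the Redis setting is exposed as REDIS_URL (a hypothetical name; the post only says a Redis key is injected):

```python
import os

# Hypothetical variable name; use whatever you configured in the console.
REQUIRED = ("REDIS_URL",)


def load_config(env=os.environ, required=REQUIRED):
    """Read settings from environment variables, failing fast at startup."""
    missing = [name for name in required if not env.get(name)]
    if missing:
        # Crashing at boot shows up clearly in the logs, instead of a
        # confusing error on the first request that needs the secret.
        raise RuntimeError("missing environment variables: " + ", ".join(missing))
    return {name: env[name] for name in required}
```

The same code runs unchanged under dokku, which injects configuration the same way.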

Then deploy that build:

```
$ gcloud beta run deploy --image gcr.io/$PROJECT/recipefe
Please specify a region:
[1] us-central1
[2] cancel
Please enter your numeric choice: 1

To make this the default region, run `gcloud config set run/region us-central1`.

Service name: (recipefe):
Deploying container to Cloud Run service [recipefe] in project [redacted] region [us-central1]
✓ Deploying... Done.
✓ Creating Revision...
✓ Routing traffic...
Done.
Service [recipefe] revision [recipefe-00002] has been deployed and is serving traffic at https://recipefe-sgp4oyga6a-uc.a.run.app
```

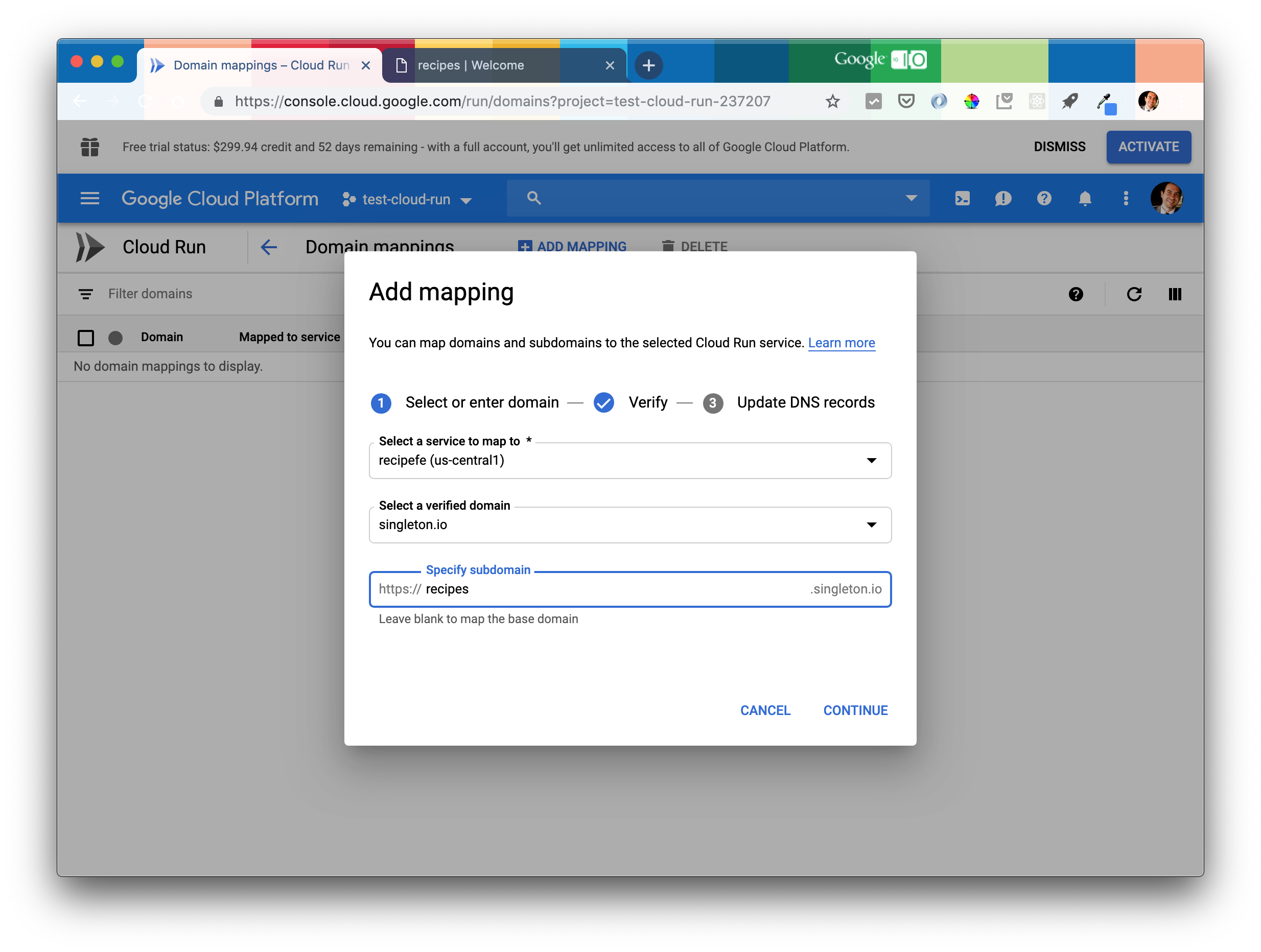

You should now be able to access your app at the URL generated by Cloud Run. Next, let’s provision a custom domain. I happen to host singleton.io on Google Domains, which makes this process very simple indeed: the base domain was pre-verified, and the step-by-step wizard in the console provisioned the domain and automatically set up SSL certificates.

Success

🎉 https://recipes.singleton.io/ is live.

deploy with git push

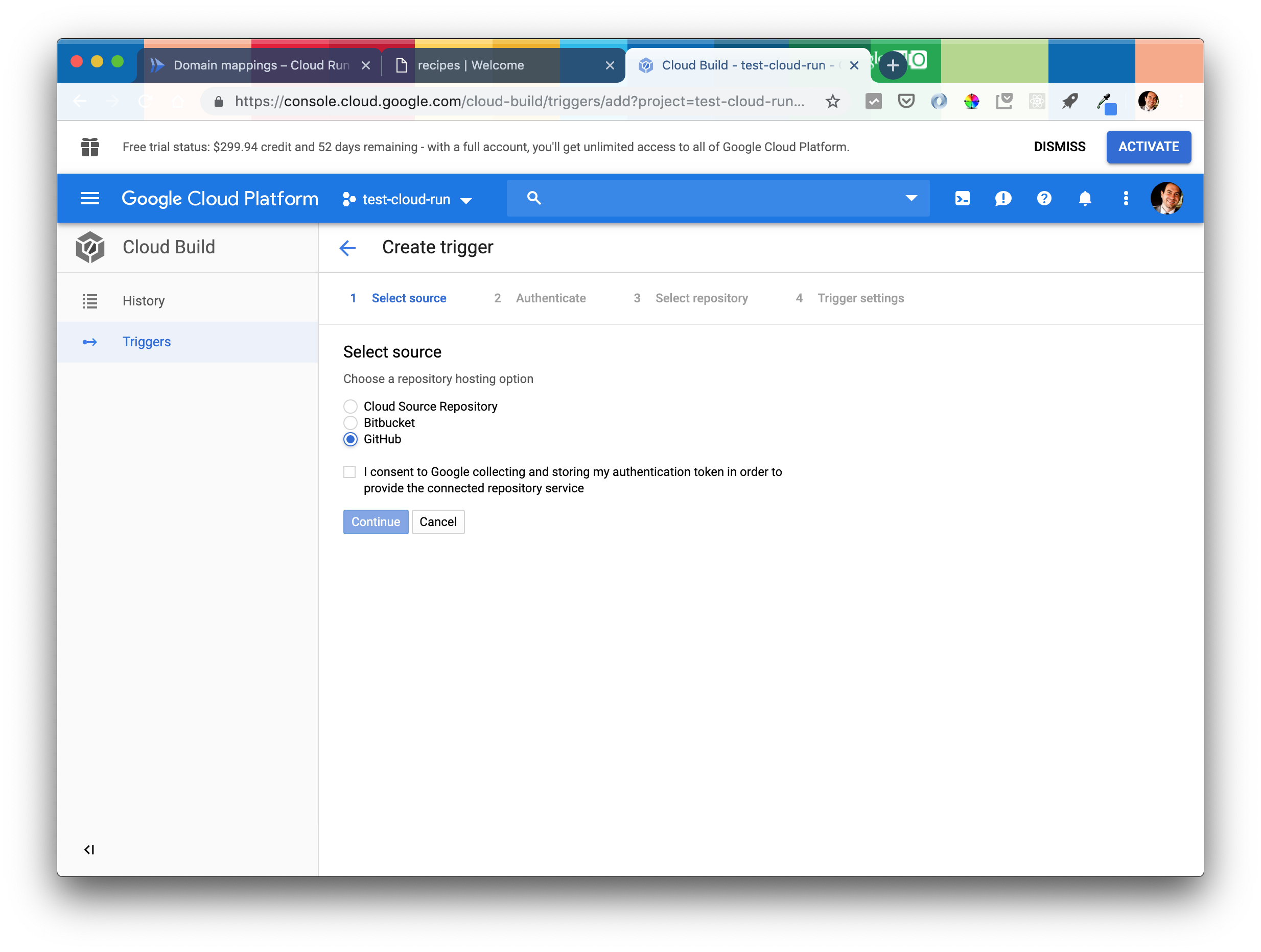

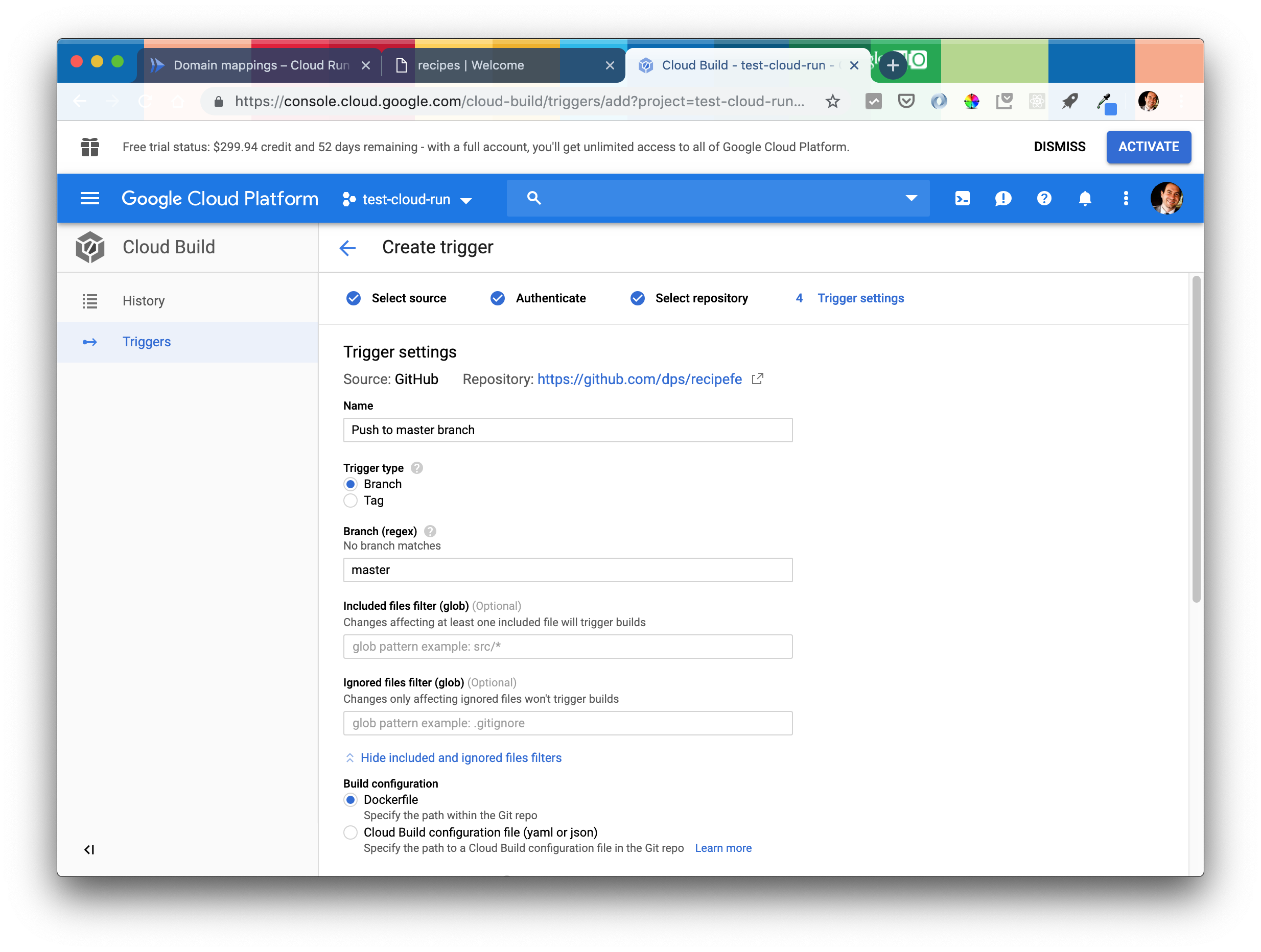

The final step in replicating the git push to production workflow is to set up continuous deployment with Cloud Build. Google’s documentation for this is good, and the flow in the console is easy to complete.

I connected a Cloud Build trigger to my existing GitHub repo. You can also host your git repo on Google Cloud (Cloud Source Repositories), which provides a similar free tier to GitHub.

The only gotcha is that you must grant the “Cloud Run Admin” and “Service Account User” roles to the Cloud Build service account, which involves visiting the separate IAM & Admin page of the GCP console.
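If you prefer the command line to the IAM & Admin page, the two grants can be expressed as gcloud projects add-iam-policy-binding calls. The sketch below only builds and prints those commands. It assumes project-wide bindings and the default Cloud Build service account (PROJECT_NUMBER@cloudbuild.gserviceaccount.com); roles/run.admin and roles/iam.serviceAccountUser are the role IDs behind the console names, and "my-project" / "123456789" are placeholders.

```python
import shlex

# Role IDs for "Cloud Run Admin" and "Service Account User".
ROLES = ("roles/run.admin", "roles/iam.serviceAccountUser")


def grant_commands(project_id, project_number):
    """Build one gcloud IAM binding command per role (not executed here)."""
    member = f"serviceAccount:{project_number}@cloudbuild.gserviceaccount.com"
    return [
        ["gcloud", "projects", "add-iam-policy-binding", project_id,
         f"--member={member}", f"--role={role}"]
        for role in ROLES
    ]


# Placeholder project ID and number; substitute your own.
for cmd in grant_commands("my-project", "123456789"):
    print(shlex.join(cmd))
```

Printing rather than running keeps the sketch side-effect free; paste the output into a shell once you have checked the values.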

Once connected, a cloudbuild.yaml file needs to be added to the repo to tell Cloud Build how to build and deploy the service.

== cloudbuild.yaml ==

```yaml
steps:
# build the container image
- name: 'gcr.io/cloud-builders/docker'
  args: ['build', '-t', 'gcr.io/$PROJECT_ID/recipefe', '.']
# push the container image to Container Registry
- name: 'gcr.io/cloud-builders/docker'
  args: ['push', 'gcr.io/$PROJECT_ID/recipefe']
# Deploy container image to Cloud Run
- name: 'gcr.io/cloud-builders/gcloud'
  args: ['beta', 'run', 'deploy', 'recipefe', '--image', 'gcr.io/$PROJECT_ID/recipefe', '--region', 'us-central1']
images:
- gcr.io/$PROJECT_ID/recipefe
```

That’s it! Now you can make local changes, test them on your machine and then commit to your git repo – git push [github] master will kick off a Cloud Build and deploy to Cloud Run.

So, how is it?

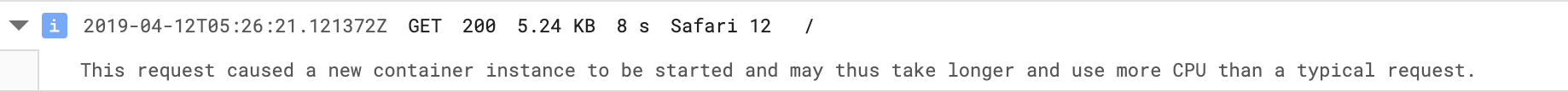

I like this developer workflow, and the serverless setup means no more tending to the underlying Linux box, but does Cloud Run live up to its promise of “Fast autoscaling”, even from zero? Cloud Run’s development tips provide a nice overview of how services get suspended between requests and how to optimize “cold starts”. When there hasn’t been a request for a long time, the whole container has to be reprovisioned and spun up: that’s a “cold start”.

I’ve typically been seeing cold starts take ~8 seconds with this simple service. That is at the outer limit of what I think is acceptable, but it’s also been quite impressive to see the service stay warm even with an hour between requests.
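One technique from the development tips mentioned above is lazy initialization: create expensive objects (such as a Redis client) on first use rather than at import time, and keep them in a global so later requests on a warm instance reuse them. A minimal sketch using functools.lru_cache; _connect_to_redis is a hypothetical placeholder for the real client constructor.

```python
import functools


def _connect_to_redis():
    # Hypothetical placeholder for an expensive constructor, e.g. opening a
    # Redis connection; swap in the real client library call here.
    return object()


@functools.lru_cache(maxsize=None)
def redis_client():
    """Return a shared client, creating it on the first call only.

    Requests that never touch Redis pay nothing, and the cached client lives
    for as long as this container instance stays warm. lru_cache does not
    lock around the call, so with several threads (gunicorn --threads 8)
    two requests racing on the very first call may both run _connect_to_redis.
    """
    return _connect_to_redis()
```

Request handlers call redis_client() wherever they need the connection, instead of creating one per request.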

autoscaling

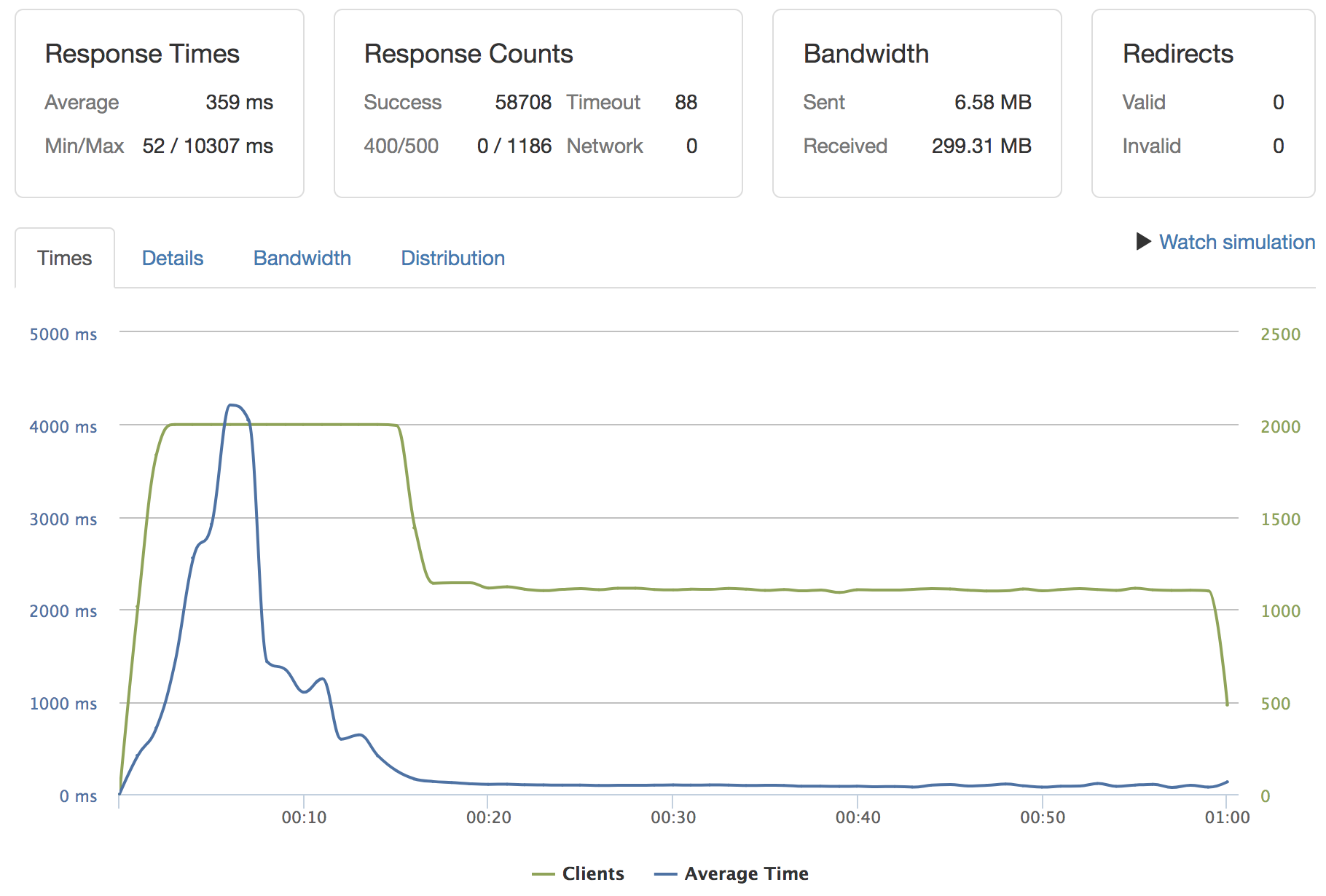

Part of the magic of Cloud Run is that the system scales up the number of running containers to handle your traffic, even when it spikes very quickly. I can confirm this works well. Cloud Run lets you set the maximum number of concurrent requests that should reach each container instance; when this concurrency number is reached, a new container instance is started. To experiment with this, I cranked the default of 80 concurrent requests down to 8 and ran a load test with loader.io. In the screenshot below you can see the system handling 1000 requests per second for a full minute. There’s a spike in latency towards the beginning as the system scales up, but it then takes that traffic in its stride.

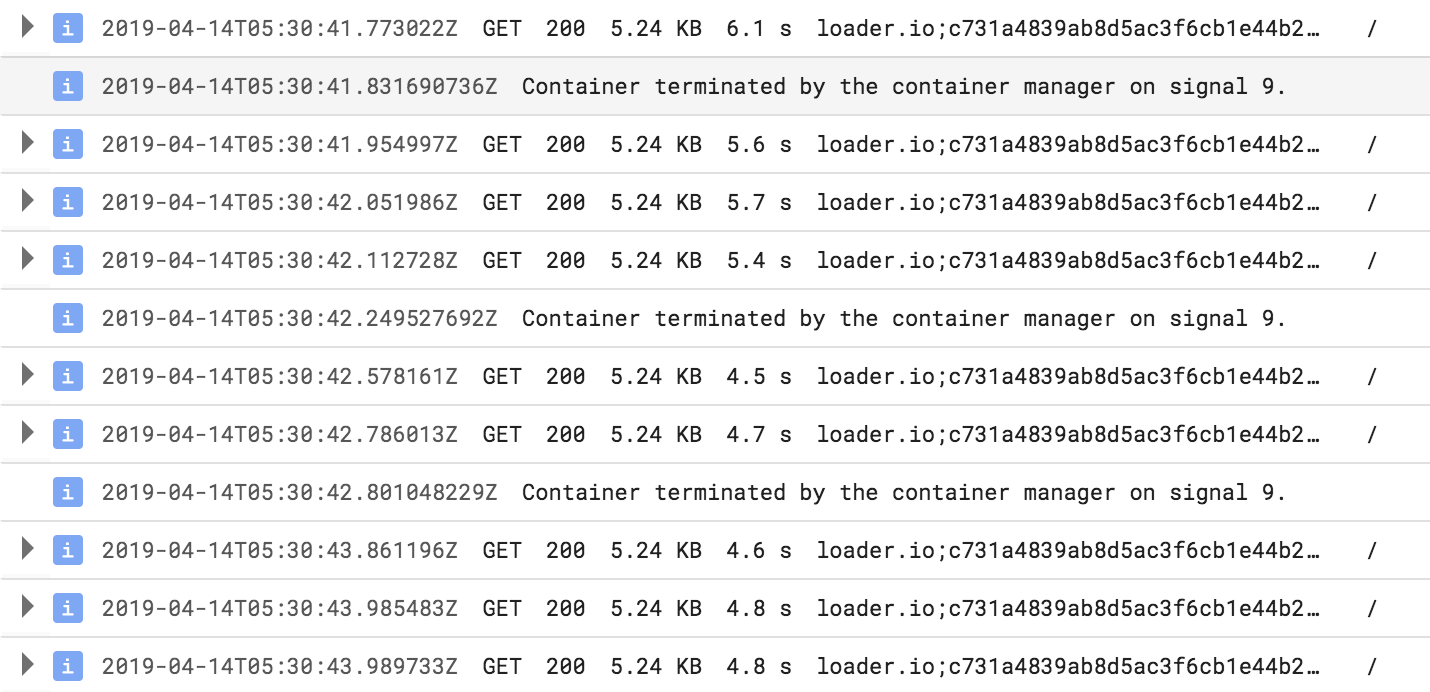

Interestingly, the container cold start times get shorter during this heavy load period - it typically takes 8 seconds to spin up a new container, but during this time those initial requests saw Cloud Run handling times in the ~5 second range for cold starts.
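The concurrency setting also gives a rough way to predict how many instances a load test needs. By Little’s law, the number of requests in flight equals the arrival rate times the average time each request takes, and each instance holds at most the configured concurrency. The 50 ms latency below is an assumed figure, not one measured in this test, and the result is only a lower bound: Cloud Run’s autoscaler may also react to CPU utilization, and cold starts temporarily inflate latency.

```python
import math


def instances_needed(requests_per_second, latency_ms, concurrency):
    """Lower-bound estimate of container instances via Little's law."""
    # In-flight requests = arrival rate x time each request spends in flight.
    in_flight = requests_per_second * latency_ms / 1000
    # Each instance accepts at most `concurrency` requests at once.
    return math.ceil(in_flight / concurrency)


# 50 ms is an assumed average latency, not a figure from the load test.
print(instances_needed(1000, 50, 8))   # 7
print(instances_needed(1000, 50, 80))  # 1
```

Lowering concurrency from 80 to 8 is what forces the fan-out: the same traffic needs several instances instead of one.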

The biggest surprise in all this is that the Cloud Run console doesn’t provide more stats or visualizations of what’s going on: I had to reconstruct the number of running containers, their start latencies and so on from request-level logs.
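Reconstructing those numbers from logs boils down to grouping requests by container instance. A rough sketch, assuming each log entry has already been reduced to a (timestamp, instance_id, latency_seconds) tuple with naive UTC timestamps; real Cloud Run log entries use their own field names, so that mapping step is left out. Treating each instance’s first request latency as its cold start is an approximation.

```python
from collections import defaultdict
from datetime import datetime, timedelta


def summarize(records, bucket=timedelta(seconds=10)):
    """Return (active instances per time bucket, cold-start latencies).

    records: iterable of (timestamp, instance_id, latency_seconds), where
    timestamp is a naive UTC datetime (hypothetical, pre-processed format).
    """
    active = defaultdict(set)
    first_seen = {}
    for ts, instance, latency in sorted(records):
        # Round the timestamp down to the start of its bucket.
        start = ts - (ts - datetime.min) % bucket
        active[start].add(instance)
        # An instance's first request is treated as its cold start.
        if instance not in first_seen:
            first_seen[instance] = latency
    counts = {start: len(ids) for start, ids in sorted(active.items())}
    return counts, list(first_seen.values())
```

Plotting counts over time gives a rough picture of how many containers were serving traffic, which is the view the console did not provide.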

Next steps

I plan to keep monitoring this app’s performance over the next few days before moving any more services over.