As you’ll know if you follow me on Twitter, I’ve been kindof obsessed with the text-to-image GPT models OpenAI released as DALL-E recently. I’ve enjoyed generating art and even collaborating with DALL-E to riff on paintings I’ve made myself. Therefore, when stable diffusion was released last week I was really keen to get it working for myself. There are lots of guides out there with somewhat contradictory advice so here are the exact steps I took to get it working on my Apple M1 Max powered 2021 MacBook Pro.

-

Install Anaconda from https://www.anaconda.com/

-

Clone https://github.com/einanao/stable-diffusion and checkout the

apple-siliconbranch.

$ git clone https://github.com/einanao/stable-diffusion

Cloning into 'stable-diffusion'...

remote: Enumerating objects: 284, done.

remote: Total 284 (delta 0), reused 0 (delta 0), pack-reused 284

Receiving objects: 100% (284/284), 42.32 MiB | 1.81 MiB/s, done.

Resolving deltas: 100% (94/94), done.

$ cd stable-diffusion

$ git checkout apple-silicon

Branch 'apple-silicon' set up to track remote branch 'apple-silicon' from 'origin'.

Switched to a new branch 'apple-silicon'

-

Download the

sd-v1-4.ckptmodel weights here. -

Put that file in

models/ldm/stable-diffusion-v1

$ mkdir -p models/ldm/stable-diffusion-v1

$ cp ~/Downloads/sd-v1-4.ckpt models/ldm/stable-diffusion-v1/model.ckpt

- Edit the

environment.yamlfile to include a missing dependency onkornia

...

- pip:

- albumentations

+ - kornia

- opencv-python

- pudb

...

- Use anaconda to install the environment

$ conda env create -f environment.yaml

- Activate the environment

$ conda activate ldm

- Set an environment variable so that MPS falls back to the CPU.

$ export PYTORCH_ENABLE_MPS_FALLBACK=1

- Start generating images!

$ python scripts/txt2img.py --prompt "A young boy, in profile, wearing navy shorts and a green shirt stares into a turquoise swimming pool in California, trees and sea in the background. High quality, acrylic paint by David Hockney" --plms --n_samples=1 --n_rows=1 --n_iter=1 --seed 1805504472

Update - how to get img2img.py working.

Apply this diff (which I wrote myself so disclaimer: it may not be the best way)

diff --git a/ldm/models/diffusion/ddim.py b/ldm/models/diffusion/ddim.py

index fb31215..880c3ae 100644

--- a/ldm/models/diffusion/ddim.py

+++ b/ldm/models/diffusion/ddim.py

@@ -17,9 +17,10 @@ class DDIMSampler(object):

self.schedule = schedule

def register_buffer(self, name, attr):

+ device = torch.device("cuda") if torch.cuda.is_available() else torch.device("mps")

if type(attr) == torch.Tensor:

- if attr.device != torch.device("cuda"):

- attr = attr.to(torch.device("cuda"))

+ if attr.device != device:

+ attr = attr.type(torch.float32).to(device).contiguous() #attr.to(device)

setattr(self, name, attr)

def make_schedule(self, ddim_num_steps, ddim_discretize="uniform", ddim_eta=0., verbose=True):

diff --git a/scripts/img2img.py b/scripts/img2img.py

index 421e215..8669199 100644

--- a/scripts/img2img.py

+++ b/scripts/img2img.py

@@ -40,7 +40,7 @@ def load_model_from_config(config, ckpt, verbose=False):

print("unexpected keys:")

print(u)

- model.cuda()

+ model.to(device='mps')

model.eval()

return model

@@ -199,11 +199,11 @@ def main():

config = OmegaConf.load(f"{opt.config}")

model = load_model_from_config(config, f"{opt.ckpt}")

- device = torch.device("cuda") if torch.cuda.is_available() else torch.device("cpu")

+ device = torch.device("cuda") if torch.cuda.is_available() else torch.device("mps")

model = model.to(device)

Run img2img.py like this

python scripts/img2img.py --init-img inputs/1.png --prompt "A young boy, in profile, wearing navy shorts and a green shirt stares into a turquoise swimming pool in California, trees and sea in the background. High quality, acrylic paint by David Hockney" --n_samples 1 --strength 0.8

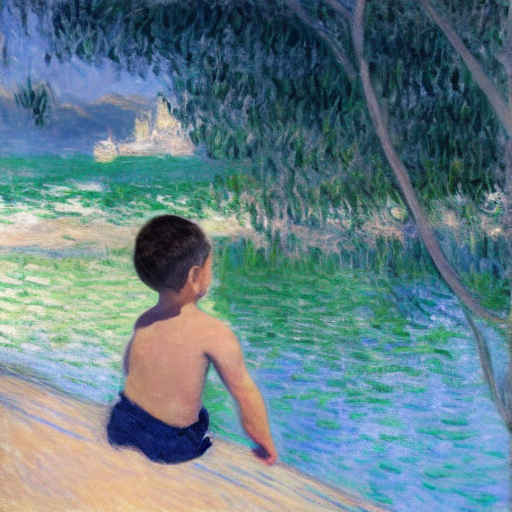

Here’s a cool example:

First, an original I painted (cropped and resized to 512x512)

img2img with the prompt:

A young boy, in profile, wearing navy shorts and a green shirt stares into a turquoise swimming pool in California, trees and sea in the background. High quality, oil painting by Monet